I am Yu Zhang (张彧). Now, I am a Research Scientist at ByteDance. If you are seeking any form of academic cooperation, please feel free to email me at aaron9834@icloud.com.

I earned my PhD in the College of Computer Science and Technology, Zhejiang University (浙江大学计算机科学与技术学院), under the supervision of Prof. Zhou Zhao (赵洲). Previously, I graduated from Chu Kochen Honors College, Zhejiang University (浙江大学竺可桢学院), with dual bachelor’s degrees in Computer Science and Automation. I have also served as a visiting scholar at University of Rochester with Prof. Zhiyao Duan and University of Massachusetts Amherst with Prof. Przemyslaw Grabowicz.

My research interests primarily focus on Multi-Modal Generative AI, specifically in Spatial Audio, Music, Singing Voice, and Speech. I have published 10+ first-author papers at top international AI conferences, such as NeurIPS, ACL, and AAAI.

🔥 News

- 2025.10: 🎉 ASAudio and Synthetic Singers are accepted by AACL 2025!

- 2025.08: 🎉 MRSAudio is accepted by NeurIPS 2025!

- 2025.08: 🎉 VersBand is accepted by EMNLP 2025!

- 2025.08: 🎉 Conan is accepted by ASRU 2025!

- 2025.08: 💼 I join ByteDance as a research scientist!

- 2025.07: 🎉 ISDrama and MESA are accepted by ACM-MM 2025!

- 2025.06: 🎓 I earn my PhD in Computer Science from Zhejiang University!

- 2025.05: 🎉 TCSinger 2 and STARS are accepted by ACL 2025!

- 2025.04: 🏫 I come to the University of Rochester as a visiting scholar, working with Prof. Zhiyao Duan!

- 2024.09: 🎉 GTSinger is accepted by NeurIPS 2024 (Spotlight)!

- 2024.09: 🎉 TCSinger is accepted by EMNLP 2024!

- 2023.12: 🎉 StyleSinger is accepted by AAAI 2024!

📝 Publications

*denotes co-first authors

🔊 Spatial Audio

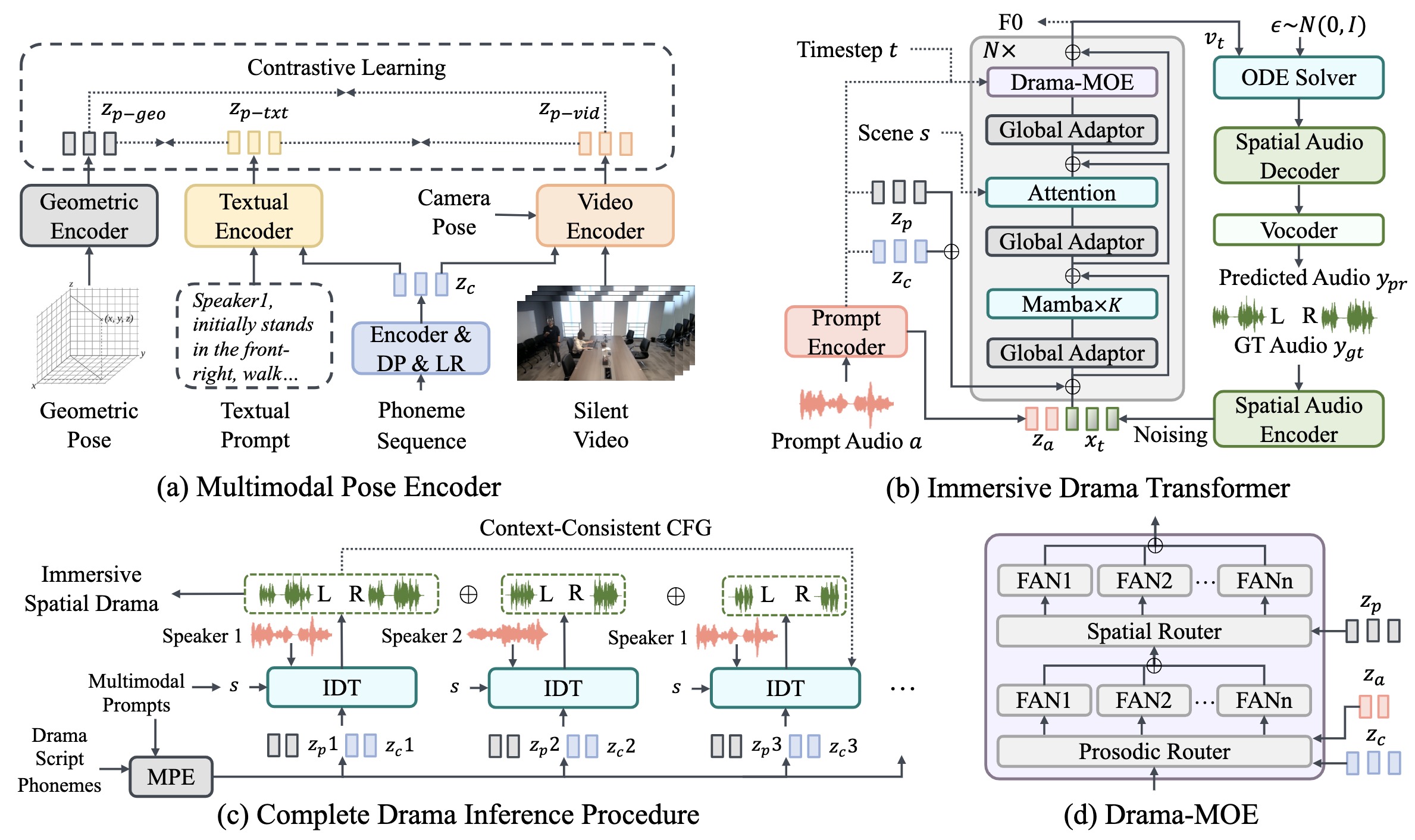

ISDrama: Immersive Spatial Drama Generation through Multimodal Prompting

Yu Zhang, Wenxiang Guo, Changhao Pan, et al.

- MRSDrama is the first multimodal recorded spatial drama dataset, containing binaural drama audios, scripts, videos, geometric poses, and textual prompts.

- ISDrama is the first immersive spatial drama generation model through multimodal prompting.

- Our work is promoted by multiple media and forums, such as

,

, and

.

AACL 2025ASAudio: A Survey of Advanced Spatial Audio Research, Zhiyuan Zhu*, Yu Zhang*, Wenxiang Guo*, et al. |ACM-MM 2025A Multimodal Evaluation Framework for Spatial Audio Playback Systems: From Localization to Listener Preference, Changhao Pan*, Wenxiang Guo*, Yu Zhang*, et al. |NeurIPS 2025MRSAudio: A Large-Scale Multimodal Recorded Spatial Audio Dataset with Refined Annotations, Wenxiang Guo*, Changhao Pan*, Zhiyuan Zhu*, Xintong Hu*, Yu Zhang*, et al. |

🎼 Music

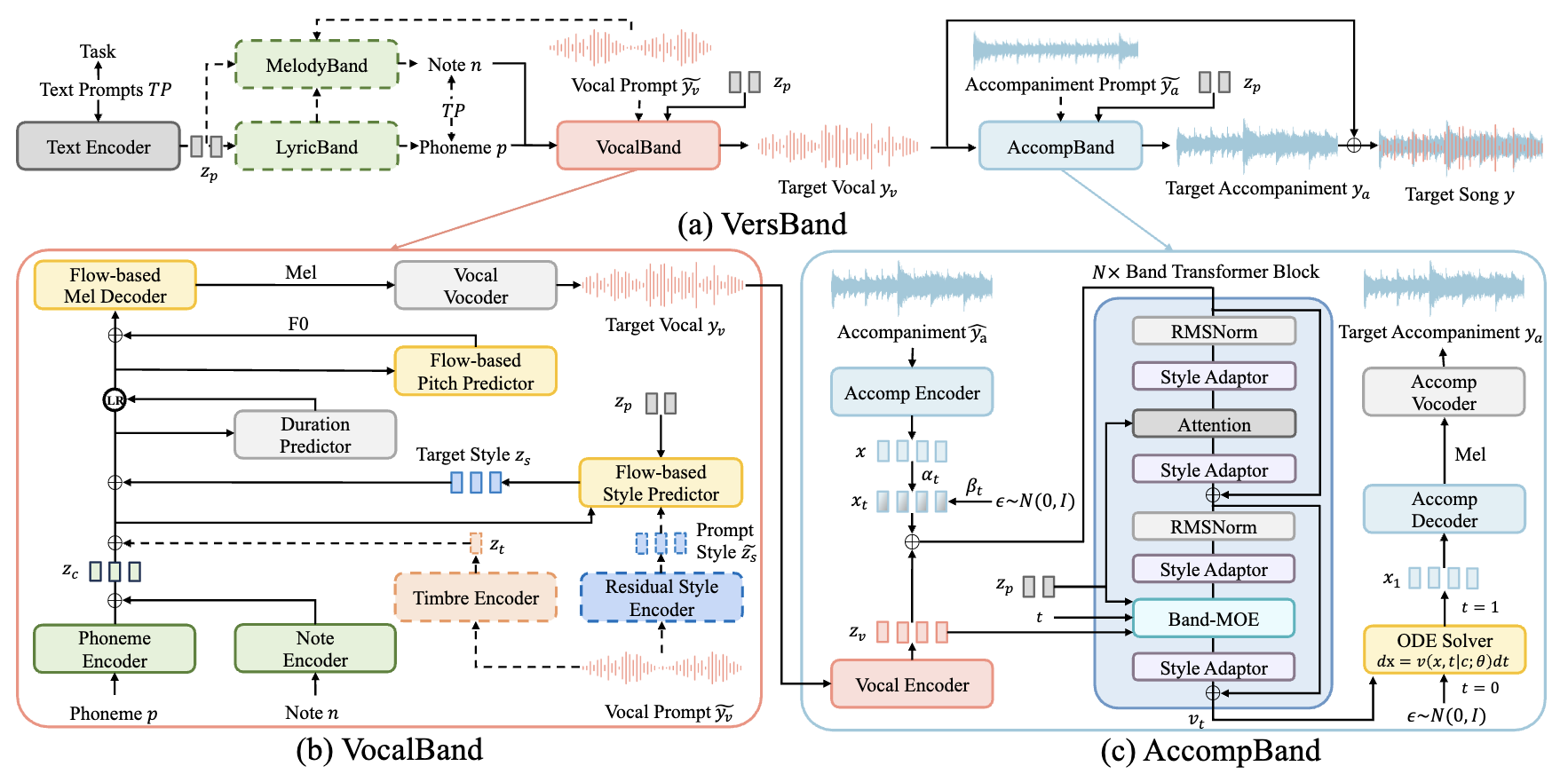

Versatile Framework for Song Generation with Prompt-based Control

Yu Zhang, Wenxiang Guo, Changhao Pan, et al.

🎙️ Singing Voice

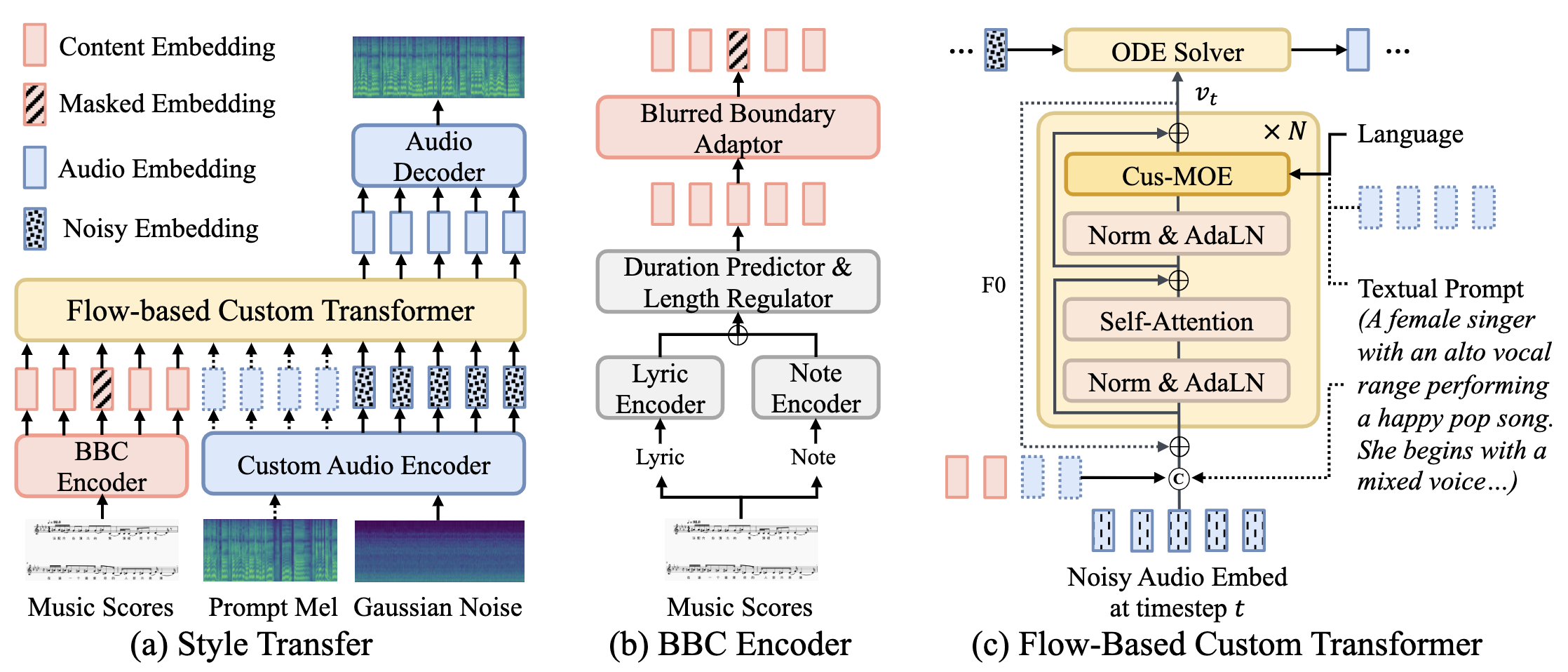

TCSinger 2: Customizable Multilingual Zero-shot Singing Voice Synthesis

Yu Zhang, Ziyue Jiang, Ruiqi Li, et al.

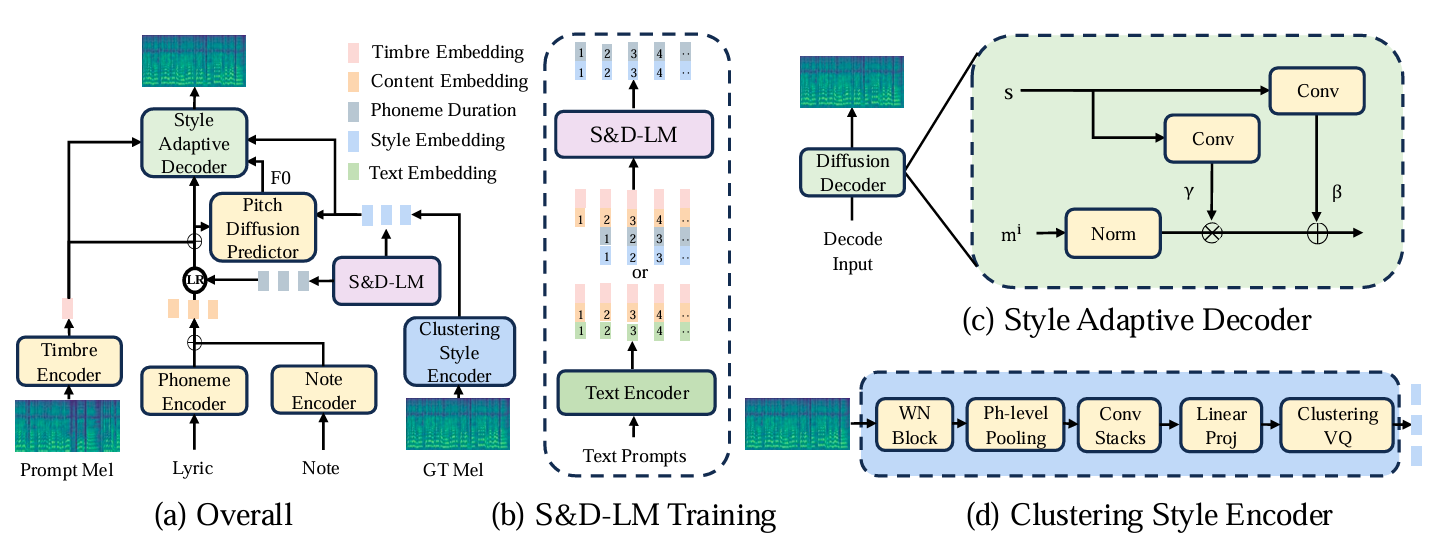

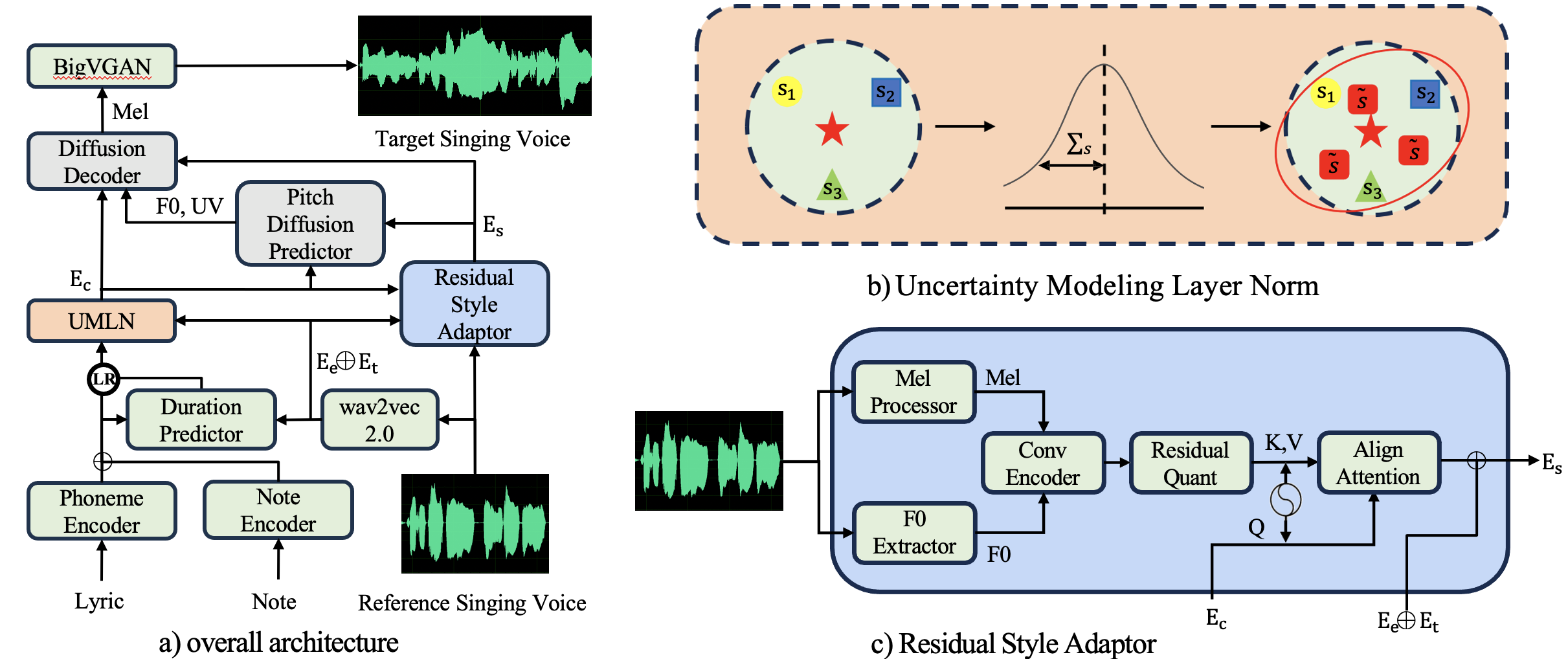

TCSinger: Zero-Shot Singing Voice Synthesis with Style Transfer and Multi-Level Style Control

Yu Zhang, Ziyue Jiang, Ruiqi Li, et al.

- TCSinger is the first zero-shot SVS model for style transfer across cross-lingual speech and singing styles, along with multi-level style control.

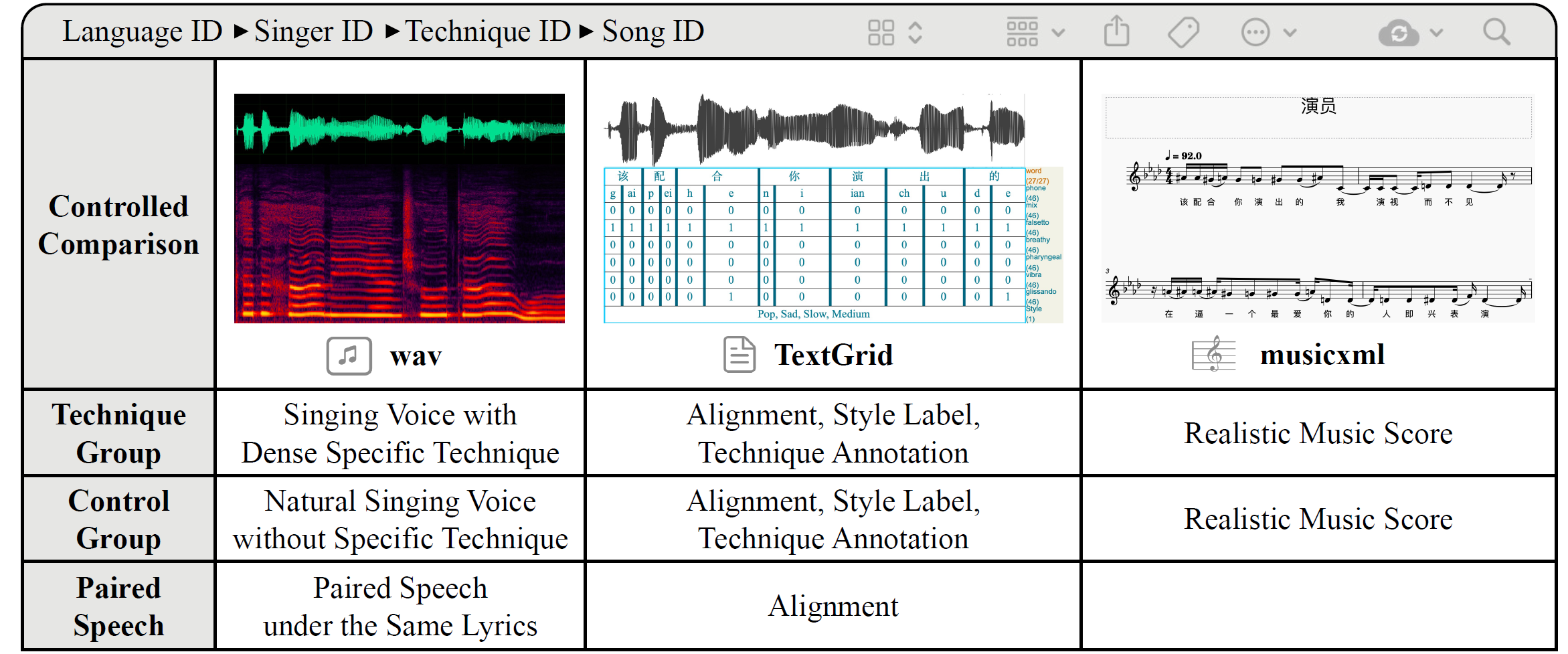

GTSinger: A Global Multi-Technique Singing Corpus with Realistic Music Scores for All Singing Tasks

Yu Zhang, Changhao Pan, Wenxinag Guo, et al.

StyleSinger: Style Transfer for Out-of-Domain Singing Voice Synthesis

Yu Zhang, Rongjie Huang, Ruiqi Li, et al.

- StyleSinger is the first singing voice synthesis model for zero-shot style transfer of out-of-domain reference singing voice samples.

ACL 2025STARS: A Unified Framework for Singing Transcription, Alignment, and Refined Style Annotation, Wenxiang Guo*, Yu Zhang*, Changhao Pan*, et al. |AACL 2025 OralSynthetic Singers: A Review of Deep-Learning-based Singing Voice Synthesis Approaches, Changhao Pan*, Dongyu Yao*, Yu Zhang*, et al. |AAAI 2025TechSinger: Technique Controllable Multilingual Singing Voice Synthesis via Flow Matching, Wenxiang Guo, Yu Zhang, Changhao Pan, et al. |ACL 2024Robust Singing Voice Transcription Serves Synthesis, Ruiqi Li, Yu Zhang, Yongqi Wang, et al. |

💬 Speech

ASRU 2025Conan: A Chunkwise Online Network for Zero-Shot Adaptive Voice Conversion, Yu Zhang, Baotong Tian, Zhiyao Duan. |PreprintMegaTTS 3: Sparse Alignment Enhanced Latent Diffusion Transformer for Zero-Shot Speech Synthesis, Ziyue Jiang, Yi Ren, Ruiqi Li, Shengpeng Ji, Zhenhui Ye, Chen Zhang, Bai Jionghao, Xiaoda Yang, Jialong Zuo, Yu Zhang, et al.

💡 Others

IJCAI 2025Leveraging Pretrained Diffusion Models for Zero-Shot Part Assembly, Ruiyuan Zhang, Qi Wang, Jiaxiang Liu, Yu Zhang, et al.

📖 Educations

- 2020.09 - 2025.06, PhD, Computer Science, College of Computer Science and Technology, Zhejiang University, Hangzhou, Zhejiang.

- 2016.09 - 2020.06, Dual BEng, Computer Science & Automation, Chu Kochen Honors College, Zhejiang University, Hangzhou, Zhejiang.

💻 Industrial Experiences

- 2025.08-Now, Research Scientist at ByteDance.

🔍 Research Experiences

- 2025.04 - 2025.06, Visiting Scholar at University of Rochester, working with Prof. Zhiyao Duan.

- 2020.06 - 2020.09, Research Intern at Alibaba-Zhejiang University Joint Institute of Frontier Technologies, working with Prof. Jianke Zhu.

- 2019.07 - 2020.01, Visiting Scholar at University of Massachusetts Amherst, working with Prof. Przemyslaw Grabowicz.

- 2018.09 - 2019.06, Research Assistant at Institute of Cyber-Systems and Control in Zhejiang University, working with Prof. Chunlin Zhou.

- 2018.09 - 2019.06, Research Assistant at Institute of Computer System Architecture in Zhejiang University, working with Prof. Chunming Wu.

🎖 Honors and Awards

- 2024.09, Outstanding PhD Student Scholarship of Zhejiang University.

- 2020.06, Outstanding Graduate of Zhejiang University (Undergraduate).

- 2019.09, First-Class Academic Scholarship of Zhejiang University (Undergraduate).

📚 Academic Services

- Conference Reviewer: NeurIPS (2024, 2025), ICLR (2025, 2026), CVPR (2026), ACL (2024, 2025), AAAI (2026), ACM-MM (2025), EMNLP (2024, 2025), AACL (2025), EACL (2026).

- Journal Reviewer: IEEE TASLP.